Alongside has big plans to break negative cycles before they turn clinical, said Dr. Elsa Friis, a licensed psychologist for the company, whose background includes identifying autism, ADHD and suicide risk using Large Language Models (LLMs).

The Alongside app currently partners with more than 200 schools across 19 states, and collects student chat data for their annual youth mental health report, which is not a peer-reviewed publication. Their findings this year, said Friis, were surprising. With almost no mention of social media or cyberbullying, the student users reported that their most pressing issues had to do with feeling overwhelmed, poor sleep habits and relationship problems.

Alongside boasts positive and insightful data points in their report and in a pilot study conducted earlier in 2025, but experts like Ryan McBain, a health researcher at the RAND Corporation, said that the data isn’t robust enough to understand the real implications of these kinds of AI mental health tools.

“If you’re going to market a product to millions of children in adolescence throughout the United States through school systems, they need to meet some minimum standard in the context of actual rigorous trials,” said McBain.

But beneath all of the report’s data, what does it really mean for students to have 24/7 access to a chatbot that is designed to address their mental health, social, and behavioral concerns?

What’s the difference between AI chatbots and AI companions?

AI companions fall under the larger umbrella of AI chatbots. And while chatbots are becoming more and more sophisticated, AI companions are distinct in the ways that they interact with users. AI companions tend to have fewer built-in guardrails, meaning they are coded to endlessly adapt to user input; AI chatbots, on the other hand, might have more guardrails in place to keep a conversation on track or on topic. For example, a troubleshooting chatbot for a food delivery company has specific instructions to carry on conversations that only pertain to food delivery and app issues, and it isn’t designed to stray from the topic because it doesn’t know how to.

But the line between AI chatbot and AI companion becomes blurred as more and more people use chatbots like ChatGPT as an emotional or therapeutic sounding board. The people-pleasing features of AI companions can become, and have become, a growing concern, especially when it comes to teens and other vulnerable people who use these companions to, at times, validate their suicidality, delusions and unhealthy dependence on these AI companions.

A recent report from Common Sense Media expanded on the harmful effects that AI companion use has on adolescents and teens. According to the report, AI platforms like Character.AI are “designed to simulate humanlike interaction” in the form of “virtual friends, confidants, and even therapists.”

Although Common Sense Media found that AI companions “pose ‘unacceptable risks’ for users under 18,” young people are still using these platforms at high rates.

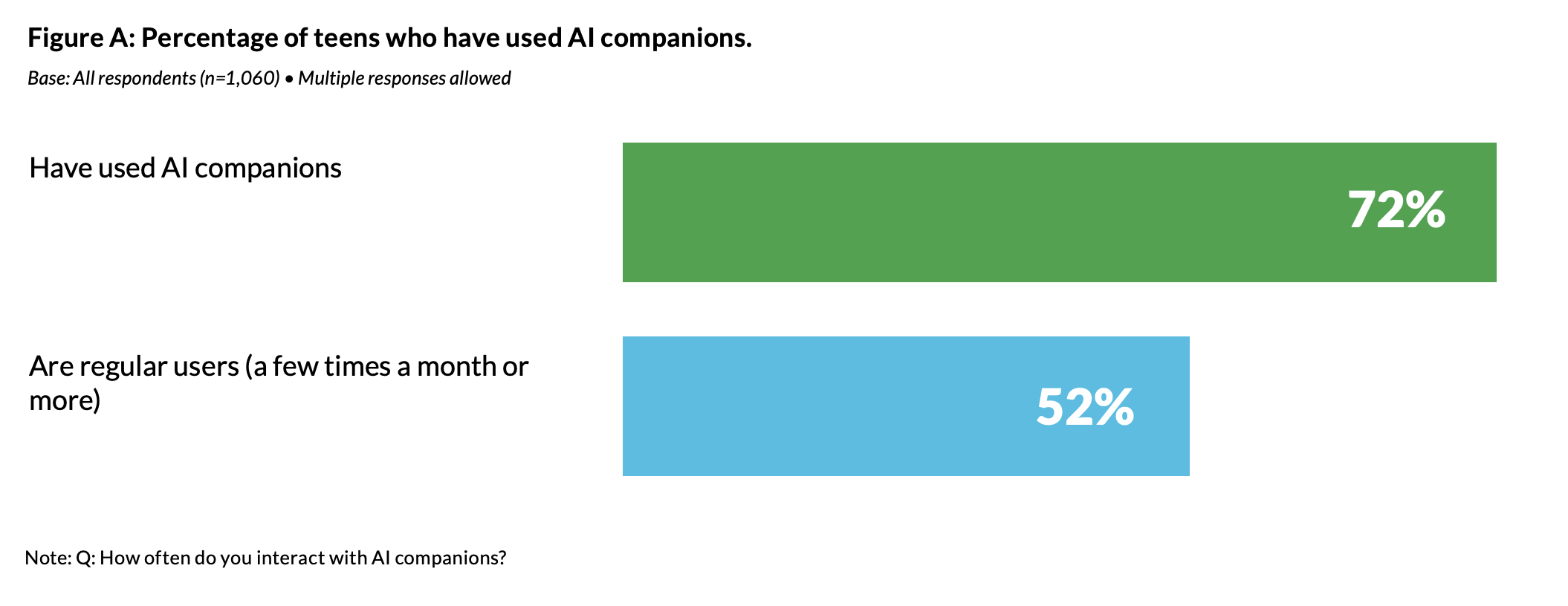

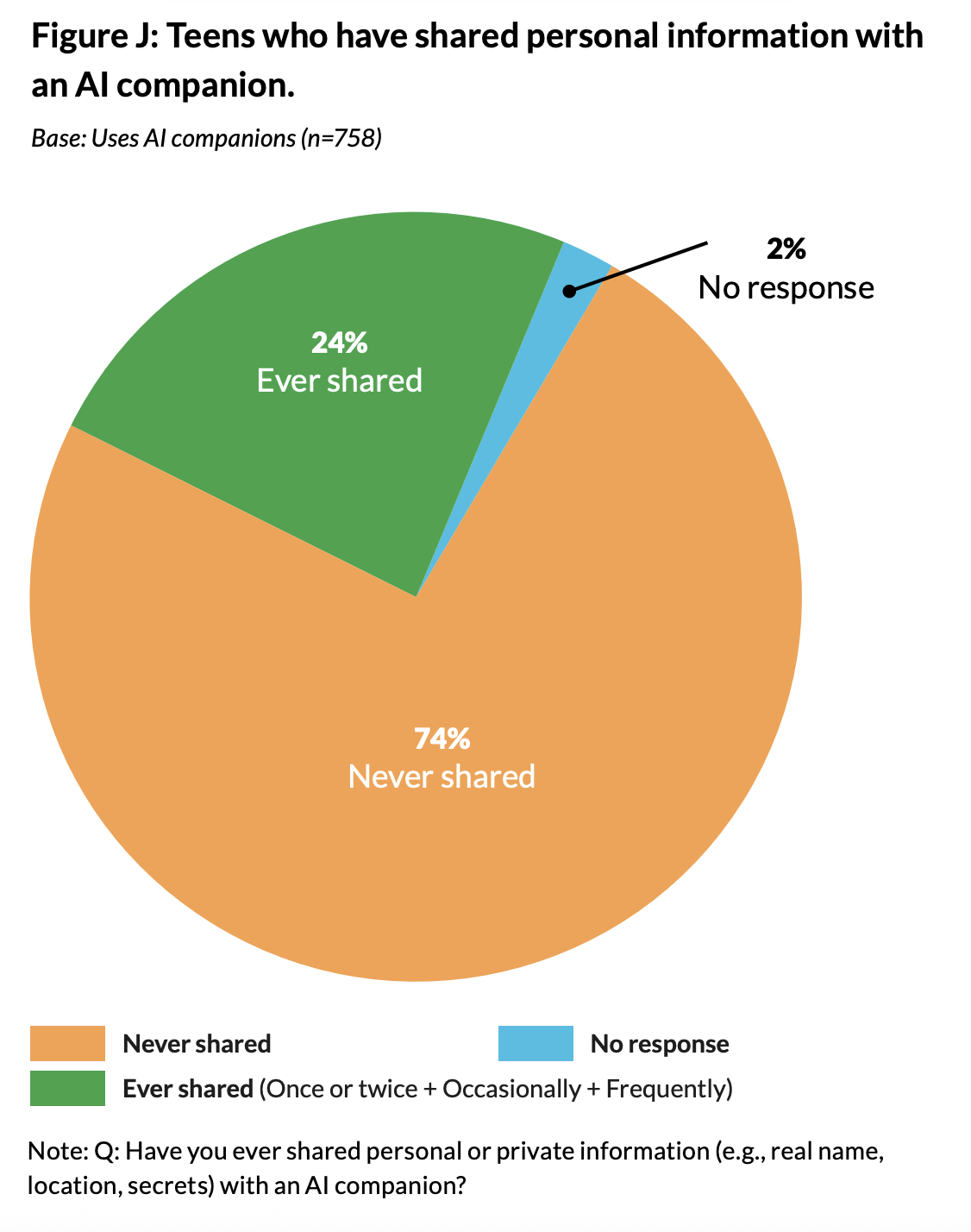

Seventy-two percent of the 1,060 teens surveyed by Common Sense said that they had used an AI companion before, and 52% of teens surveyed are “regular users” of AI companions. However, for the most part, the report found that the majority of teens value human friendships more than AI companions, do not share personal information with AI companions and hold some level of skepticism toward AI companions. Thirty-nine percent of teens surveyed also said that they apply skills they practiced with AI companions, like expressing emotions, apologizing and standing up for themselves, in real life.

When comparing Common Sense Media’s recommendations for safer AI use to Alongside’s chatbot features, they do meet some of these recommendations, like crisis intervention, usage limits and skill-building elements. According to Mehta, there is a big difference between an AI companion and Alongside’s chatbot. Alongside’s chatbot has built-in safety features that require a human to review certain conversations based on trigger words or concerning phrases. And unlike tools like AI companions, Mehta continued, Alongside discourages student users from chatting too much.

One of the biggest challenges that chatbot developers like Alongside face is mitigating people-pleasing tendencies, a defining characteristic of AI companions, said Friis. Guardrails have been put into place by Alongside’s team to avoid people-pleasing, which can turn sinister. “We aren’t going to adapt to foul language, we aren’t going to adapt to bad habits,” said Friis. But it’s up to Alongside’s team to anticipate and determine which language falls into harmful categories, including when students try to use the chatbot for cheating.

According to Friis, Alongside errs on the side of caution when it comes to determining what kind of language constitutes a concerning statement. If a chat is flagged, educators at the partner school are pinged on their phones. In the meantime, the student is prompted by Kiwi to complete a crisis assessment and directed to emergency service numbers if needed.

Addressing staffing shortages and resource gaps

In school settings where the ratio of students to school counselors is often impossibly high, Alongside acts as a triaging tool or liaison between students and their trusted adults, said Friis. For example, a conversation between Kiwi and a student might consist of back-and-forth troubleshooting about creating healthier sleeping habits. The student might be prompted to talk to their parents about making their room darker or adding a nightlight for a better sleep environment. The student might then come back to their chat after a conversation with their parents and tell Kiwi whether or not that solution worked. If it did, then the conversation concludes, but if it didn’t, then Kiwi can suggest other potential solutions.

According to Dr. Friis, a couple of 5-minute back-and-forth conversations with Kiwi would translate to days if not weeks of conversations with a school counselor who has to prioritize students with the most severe issues and needs, like repeated suspensions, suicidality and dropping out.

Using digital technologies to triage health issues is not a new idea, said RAND researcher McBain, who pointed to doctors’ waiting rooms that greet patients with a health screener on an iPad.

“If a chatbot is a slightly more dynamic user interface for gathering that sort of information, then I think, in theory, that is not an issue,” McBain continued. The unanswered question is whether chatbots like Kiwi perform better than, as well as, or worse than a human would, but the only way to compare the human to the chatbot would be through randomized control trials, said McBain.

“One of my biggest concerns is that companies are rushing in to try to be the first of their kind,” said McBain, and in the process are lowering the safety and quality standards under which these companies and their academic partners circulate optimistic and eye-catching results from their product, he continued.

But there’s mounting pressure on school counselors to meet student needs with limited resources. “It’s really hard to create the space that [school counselors] want to create. Counselors want to have those interactions. It’s the system that’s making it really hard to have them,” said Friis.

Alongside offers their school partners professional development and consultation services, as well as quarterly summary reports. A lot of the time these services revolve around packaging data for grant proposals or for presenting compelling information to superintendents, said Friis.

A research-backed approach

On their website, Alongside touts the research-backed methods used to develop their chatbot, and the company has partnered with Dr. Jessica Schleider at Northwestern University, who studies and develops single-session mental health interventions (SSIs): mental health interventions designed to address and provide resolution to mental health concerns without the expectation of any follow-up sessions. A typical counseling intervention is, at minimum, 12 weeks long, so single-session interventions were appealing to the Alongside team, but “what we know is that no product has ever been able to really effectively do that,” said Friis.

However, Schleider’s Lab for Scalable Mental Health has published multiple peer-reviewed trials and clinical research demonstrating positive outcomes for implementation of SSIs. The Lab for Scalable Mental Health also offers open source materials for parents and professionals interested in implementing SSIs for teens and young people, and their initiative Project YES offers free and anonymous online SSIs for youth experiencing mental health concerns.

What happens to a child’s data when using AI for mental health interventions?

Alongside gathers student data from their conversations with the chatbot, like mood, hours of sleep, exercise habits, social habits and online interactions, among other things. While this data can offer schools insight into their students’ lives, it does raise questions about student surveillance and data privacy.

Alongside, like many other generative AI tools, uses other LLMs’ APIs (application programming interfaces), meaning they incorporate another company’s LLM code, like that used for OpenAI’s ChatGPT, in their chatbot programming, which processes chat input and produces chat output. They also have their own in-house LLMs, which Alongside’s AI team has developed over a couple of years.

Growing concerns about the storage of user data and personal information are especially pertinent when it comes to sensitive student data. The Alongside team has opted in to OpenAI’s zero data retention policy, which means that none of the student data is stored by OpenAI or other LLMs that Alongside uses, and none of the data from chats is used for training purposes.

Because Alongside operates in schools across the U.S., they are FERPA and COPPA compliant, but the data has to be stored somewhere. So, students’ personally identifiable information (PII) is uncoupled from their chat data as that information is stored by Amazon Web Services (AWS), a cloud-based industry standard for private data storage used by tech companies around the world.

Alongside uses an encryption process that disaggregates the student PII from their chats. Only when a conversation gets flagged, and needs to be seen by humans for safety reasons, does the student PII connect back to the chat in question. In addition, Alongside is required by law to store student chats and information when it has alerted a crisis, and parents and guardians are free to request that information, said Friis.
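
To make that uncoupling concrete, here is a minimal sketch, not Alongside’s actual system. It swaps the encryption process the company describes for a simpler technique, random pseudonyms, and every name in it (`ChatStore`, `enroll`, `resolve_if_flagged`) is hypothetical. The idea it illustrates: chat text is filed under a random ID, and that ID is mapped back to a student only when a conversation has been flagged.

```python
import secrets


class ChatStore:
    """Toy model: identity records and chat records are kept apart.

    Assumption: random pseudonyms stand in for the encryption process
    described in the article; this is an illustration, not their design.
    """

    def __init__(self):
        self._identities = {}  # pseudonym -> PII (hypothetical identity vault)
        self._chats = {}       # pseudonym -> messages, stored without PII

    def enroll(self, name, school):
        """Register a student and return the random ID used for their chats."""
        pseudonym = secrets.token_hex(8)
        self._identities[pseudonym] = {"name": name, "school": school}
        self._chats[pseudonym] = []
        return pseudonym

    def add_message(self, pseudonym, text):
        """File a chat message under the pseudonym only."""
        self._chats[pseudonym].append(text)

    def chat_view(self, pseudonym):
        """Return the chat history; no identity data is attached."""
        return list(self._chats[pseudonym])

    def resolve_if_flagged(self, pseudonym, flagged):
        """Link a chat back to a student only when it has been flagged."""
        if not flagged:
            return None
        return dict(self._identities[pseudonym])
```

In this sketch, anyone reading the chat table alone sees messages keyed by a random ID; the student’s name appears only after the flagged check passes.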

Typically, parental consent and student data policies are handled through the school partners, and as with any school services offered, like counseling, there is a parental opt-out option, which must adhere to state and district guidelines on parental consent, said Friis.

Alongside and their school partners put guardrails in place to make sure that student data is kept safe and confidential. However, data breaches can still happen.

How the Alongside LLMs are trained

One of Alongside’s in-house LLMs is used to identify potential crises in student chats and alert the necessary adults to that crisis, said Mehta. This LLM is trained on student and synthetic outputs and keywords that the Alongside team enters manually. And because language changes often and isn’t always straightforward or easily recognizable, the team keeps an ongoing log of different words and phrases, like the popular abbreviation “KMS” (shorthand for “kill myself”), that they retrain this particular LLM to understand as crisis driven.
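
As a rough illustration of why that log needs constant upkeep, the sketch below checks messages against a hand-maintained term list. It is a stand-in built on assumptions: Alongside describes retraining an LLM, not matching keywords, and the function names and the term list (beyond “KMS,” which the team cited) are hypothetical.

```python
import re

# Hand-maintained log of crisis terms. Only "kms" and its expansion come
# from the article; a real list would be far longer and clinically reviewed.
CRISIS_TERMS = {"kms", "kill myself"}


def normalize(text):
    """Lowercase the text and replace punctuation with spaces."""
    return re.sub(r"[^a-z0-9\s]", " ", text.lower())


def is_flagged(message, terms=CRISIS_TERMS):
    """Return True if any logged term appears as a whole word or phrase."""
    cleaned = " ".join(normalize(message).split())  # collapse whitespace
    padded = f" {cleaned} "
    return any(f" {term} " in padded for term in terms)
```

A phrase that is not yet in the log goes unnoticed until someone adds it, for example by calling `is_flagged(message, CRISIS_TERMS | {"new phrase"})`, which is the manual step the team describes.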

Although, according to Mehta, the process of manually inputting data to train the crisis-assessing LLM is one of the biggest efforts that he and his team have to tackle, he doesn’t see a future in which this process could be automated by another AI tool. “I wouldn’t be comfortable automating something that could trigger a crisis [response],” he said. The preference is that the clinical team led by Friis contribute to this process through a clinical lens.

But with the potential for rapid growth in Alongside’s number of school partners, these processes will be very difficult to keep up with manually, said Robbie Torney, senior director of AI programs at Common Sense Media. Although Alongside emphasized their process of including human input in both their crisis response and LLM development, “you can’t necessarily scale a system like [this] easily because you’re going to run into the need for more and more human review,” continued Torney.

Alongside’s 2024-25 report tracks conflicts in students’ lives, but doesn’t distinguish whether those conflicts are happening online or in person. But according to Friis, it doesn’t really matter where peer-to-peer conflict is taking place. Ultimately, it’s most important to be person-centered, said Dr. Friis, and remain focused on what really matters to each individual student. Alongside does offer proactive skill-building lessons on social media safety and digital stewardship.

When it comes to sleep, Kiwi is programmed to ask students about their phone habits “because we know that having your phone at night is one of the main things that’s gonna keep you up,” said Dr. Friis.

Universal mental health screeners available

Alongside also offers an in-app universal mental health screener to school partners. One district in Corsicana, Texas, an old oil town located outside of Dallas, found the data from the universal mental health screener invaluable. According to Margie Boulware, executive director of special programs for Corsicana Independent School District, the community has had issues with gun violence, but the district didn’t have a way of surveying their 6,000 students on the mental health effects of traumatic events like these until Alongside was introduced.

According to Boulware, 24% of students surveyed in Corsicana had a trusted adult in their life, six percentage points fewer than the average in Alongside’s 2024-25 report. “It’s a little shocking how few kids are saying ‘we actually feel connected to an adult,’” said Friis. According to research, having a trusted adult helps with young people’s social and emotional health and wellbeing, and can also counter the effects of adverse childhood experiences.

In an area where the school district is the largest employer and where 80% of students are economically disadvantaged, mental health resources are bare. Boulware drew a correlation between the uptick in gun violence and the high percentage of students who said that they did not have a trusted adult in their home. And although the data given to the district from Alongside did not directly correlate with the violence that the community had been experiencing, it was the first time that the district was able to take a more comprehensive look at student mental health.

So the district formed a task force to tackle these issues of increased gun violence and decreased mental health and belonging. And for the first time, rather than having to guess how many students were struggling with behavioral issues, Boulware and the task force had representative data to build off of. Without the universal screening survey that Alongside delivered, the district would have stuck with their end-of-year feedback survey, asking questions like “How was your year?” and “Did you like your teacher?”

Boulware believed that the universal screening survey encouraged students to self-reflect and answer questions more honestly when compared with previous feedback surveys the district had conducted.

According to Boulware, student resources, and mental health resources in particular, are scarce in Corsicana. But the district does have a team of counselors, including 16 academic counselors and six social emotional counselors.

With not enough social emotional counselors to go around, Boulware said that a lot of tier one students, or students who don’t require regular one-on-one or group academic or behavioral interventions, fly under their radar. She saw Alongside as an easily accessible tool for students that offers discreet coaching on mental health, social and behavioral issues. And it also offers educators and administrators like herself a glimpse behind the curtain into student mental health.

Boulware praised Alongside’s proactive features, like gamified skill building for students who struggle with time management or task organization and can earn points and badges for completing certain skills lessons.

And Alongside fills a crucial gap for staff in Corsicana ISD. “The amount of hours that our kiddos are on Alongside … are hours that they’re not waiting outside of a student support counselor office,” which, because of the low ratio of counselors to students, allows the social emotional counselors to focus on students experiencing a crisis, said Boulware. There is “no way I could have allotted the resources” that Alongside brings to Corsicana, Boulware added.

The Alongside app requires 24/7 human monitoring by their school partners. This means that designated educators and admin in each district and school are assigned to receive alerts at all hours of the day, any day of the week, including during holidays. This feature was a concern for Boulware at first. “If a kiddo’s struggling at three o’clock in the morning and I’m asleep, what does that look like?” she said. Boulware and her team had to hope that an adult sees a crisis alert very quickly, she continued.

This 24/7 human monitoring system was tested in Corsicana last Christmas break. An alert came in and it took Boulware ten minutes to see it on her phone. By that time, the student had already begun working on an assessment survey prompted by Alongside, the principal, who had seen the alert before Boulware, had called her, and she had received a text message from the student support council. Boulware was able to contact their local chief of police and address the crisis unfolding. The student was able to connect with a counselor that same afternoon.